Cache Oblivious Algorithms

Introduction to Cache Oblivious Algorithms

Cache oblivious algorithms are algorithms that perform well even though they do not know the parameters of the cache (the block size and the cache size).

The Cache Model

We assume a two-level memory: a cache and main memory. The block size is $B$ and the size of the cache is $M$; the size of main memory is assumed to be infinite. To access data that is not in the cache, we must first bring the whole block containing it into the cache. This may evict another block, which then has to be written back to memory.

- Accesses to cache are free, but we still care about the computation time (work) of the algorithm itself.

- If we have an array, it might not be aligned with the blocks: there can be partial blocks at the beginning and at the end that the array does not fill, so we may need up to 2 extra blocks.

- Count the block memory transfers between cache and main memory (the number of block reads/writes). The number of memory transfers $MT(N)$ is a function of $N$, but $B$ and $M$ are parameters and do matter.
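A minimal sketch of the alignment effect, assuming addresses are counted in elements and blocks start at multiples of $B$ (`blocks_spanned` is a hypothetical helper, not from the source). It counts how many blocks an array occupying `n` consecutive addresses touches.

```python
def blocks_spanned(start: int, n: int, B: int) -> int:
    """Number of size-B blocks touched by the n elements at addresses
    start, start + 1, ..., start + n - 1 (blocks begin at multiples of B)."""
    if n <= 0:
        return 0
    first_block = start // B
    last_block = (start + n - 1) // B
    return last_block - first_block + 1


# An aligned array holding exactly one block's worth of elements uses one block ...
print(blocks_spanned(0, 64, 64))  # 1
# ... but shifting it by a single address makes it straddle two blocks.
print(blocks_spanned(1, 64, 64))  # 2
```

In general the count is at most $\lceil n/B \rceil + 1 \le n/B + 2$, which is where the "+2" in the scanning bound comes from.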

Cache Oblivious Algorithm

- Algorithms don't know $B$ and $M$

- Accessing memory automatically fetches the block into the cache and evicts the block that will be used furthest in the future (idealized model)

- Memory is one big array, divided into blocks of size $B$

Note: if an algorithm is cache oblivious and is efficient in the 2-level model, it will also be efficient in the $k$-level model (L1, L2, L3, … caches).
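The idealized model is easy to simulate when the whole access sequence is known in advance, which is what "furthest in the future" requires. Below is a minimal sketch; `count_transfers` is our own helper, it counts block fetches only and ignores write-backs. The usage lines replay the access patterns of the basic algorithms below: a single scan, and the two interleaved scans of `reverse`.

```python
import math


def count_transfers(addresses, B, M):
    """Count block fetches in the idealized cache model.

    Each access brings its block into a cache holding M // B blocks; when the
    cache is full, the block whose next use lies furthest in the future is
    evicted (Belady's offline rule). Write-backs are not counted here.
    """
    blocks = [a // B for a in addresses]
    capacity = max(1, M // B)
    # next_use[i]: position of the next access to blocks[i] after i (inf if none)
    next_use = [math.inf] * len(blocks)
    last_seen = {}
    for i in range(len(blocks) - 1, -1, -1):
        next_use[i] = last_seen.get(blocks[i], math.inf)
        last_seen[blocks[i]] = i
    cache = {}  # block id -> position of its next use
    transfers = 0
    for i, b in enumerate(blocks):
        if b not in cache:
            transfers += 1
            if len(cache) >= capacity:
                victim = max(cache, key=cache.get)  # used furthest in the future
                del cache[victim]
        cache[b] = next_use[i]
    return transfers


N, B = 1000, 10
scan = list(range(N))
rev = [a for i in range(N // 2) for a in (i, N - 1 - i)]  # reverse's access order
print(count_transfers(scan, B, M=100))   # 100
print(count_transfers(rev, B, M=2 * B))  # 100
print(count_transfers(rev, B, M=B))      # 1000
```

With room for only one block (`M = B`), the two scans of `reverse` evict each other on every access, which is why the parallel-scan bound assumes $M/B \ge 2$.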

Basic Algorithms

- Single Scanning

`Scanning(A, N)`: for `i` from 0 to `N-1`, visit `A[i]`; e.g. summing an array. $MT(N) \le \lceil N/B \rceil + 1 \le N/B + 2 = O(N/B + 1)$.

For the $+2$, see the second point in the cache model.

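- Parallel scans

`reverse(A, N)`: for `i` from 0 to $\lfloor N/2 \rfloor - 1$, exchange `A[i]` with `A[N-1-i]` (0-indexed). Assuming $M/B \ge 2$, i.e. the cache can hold the two blocks being scanned at once, $MT(N) = O(N/B + 1)$.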

- Binary search: we hope to get $O(\log_B N)$, but it actually costs $\Theta(\log N - \log B) = \Theta(\log(N/B))$. At first each probe touches a different block; only the last few probes fall in the same block.
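The two access patterns above, sketched in Python. Assumptions: 0-based indexing, and for the search we use the array `A[i] = i` starting at a block boundary, so probe `mid` lies in block `mid // B`. The function names are ours.

```python
def reverse_in_place(A):
    """Two parallel scans: one moving right from the front, one moving left
    from the back. With 0-based indices the partner of A[i] is A[N - 1 - i]."""
    N = len(A)
    for i in range(N // 2):
        A[i], A[N - 1 - i] = A[N - 1 - i], A[i]
    return A


def binary_search_blocks(N, target, B):
    """Binary search for `target` in the sorted array [0, 1, ..., N - 1],
    assumed to start at a block boundary. Returns (probes, distinct blocks)."""
    lo, hi = 0, N - 1
    probes, blocks = 0, set()
    while lo <= hi:
        mid = (lo + hi) // 2
        probes += 1
        blocks.add(mid // B)  # the block holding A[mid] = mid
        if mid == target:
            break
        if mid < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return probes, len(blocks)


print(reverse_in_place([1, 2, 3, 4, 5]))    # [5, 4, 3, 2, 1]
print(binary_search_blocks(2**20, 0, 64))   # (20, 14)
```

For $N = 2^{20}$ and $B = 64$ the search makes $\log_2 N = 20$ probes touching $\log_2(N/B) = 14$ distinct blocks, whereas $\log_B N \approx 3.3$.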

Divide and Conquer

- The algorithm divides the problem down to an $O(1)$-size base case

- The analysis considers the point at which the problem

  - fits in cache ($N = O(M)$)

  - fits in a block ($N = O(B)$)

Order Statistics (median finding)

- Conceptually partition the array into 5-tuples

- Compute the median of each one ($O(N/B + 1)$: one scan)

- Recursively compute the median $x$ of these medians ($MT(N/5)$)

- Partition around $x$ ($O(N/B + 1)$: parallel scans)

- Recurse in one side ($MT(7N/10)$)
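A sketch of the steps above in plain Python lists, so the block structure itself is not modeled. `select` is our name; one choice we add is a three-way partition (less / equal / greater), so repeated keys cannot stall the recursion.

```python
def select(a, k):
    """Return the k-th smallest element (0-indexed) of the list a,
    following the median-of-medians steps."""
    if len(a) <= 5:
        return sorted(a)[k]
    # Median of each 5-tuple (one pass over a).
    groups = [sorted(a[i:i + 5]) for i in range(0, len(a), 5)]
    medians = [g[len(g) // 2] for g in groups]
    # Recursively find the median x of the medians.
    x = select(medians, len(medians) // 2)
    # Partition around x; keys equal to x are only counted.
    less = [v for v in a if v < x]
    equal_count = sum(1 for v in a if v == x)
    greater = [v for v in a if v > x]
    # Recurse into one side only.
    if k < len(less):
        return select(less, k)
    if k < len(less) + equal_count:
        return x
    return select(greater, k - len(less) - equal_count)


print(select([9, 1, 8, 2, 7, 3, 6, 4, 5, 0], 4))  # 4
```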

Analysis

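The recurrence is $MT(N) = MT(N/5) + MT(7N/10) + O(N/B + 1)$. If we assume $MT(O(1)) = O(1)$, see what we get ($L(N)$ is the number of leaves of the recursion tree):

$$L(N) = L(N/5) + L(7N/10), \quad L(1) = 1 \quad\Rightarrow\quad L(N) = \Theta(N^{\alpha}), \quad \alpha \approx 0.8398,$$

where $\alpha$ solves $(1/5)^{\alpha} + (7/10)^{\alpha} = 1$. Each leaf costs $O(1)$, so $MT(N) = \Omega(N^{\alpha})$, a term that does not shrink with $B$ at all.

Now we assume $MT(O(B)) = O(1)$; then the number of leaves is $L(N/B) = \Theta((N/B)^{\alpha}) = o(N/B)$. The level costs shrink geometrically (each level costs $1/5 + 7/10 = 9/10$ of the level above), so the root dominates and $MT(N) = O(N/B)$.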
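A quick numeric look at this analysis, with every $O(\cdot)$ constant set to 1 (an assumption of the sketch). `alpha` finds the exponent by bisection, and `median_mt` (our name) evaluates the recurrence with either base case.

```python
def alpha(tol=1e-12):
    """Solve (1/5)**a + (7/10)**a = 1 for a by bisection.
    The left side decreases in a: it is 2 at a = 0 and 0.9 at a = 1."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if 0.2 ** mid + 0.7 ** mid > 1:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def median_mt(n, B, base):
    """Evaluate MT(n) = MT(n/5) + MT(7n/10) + n/B + 1, with MT(n) = 1 once n <= base."""
    if n <= base:
        return 1.0
    return median_mt(n / 5, B, base) + median_mt(7 * n / 10, B, base) + n / B + 1


N, B = 10**5, 64
print(round(alpha(), 4))                   # 0.8398
print(median_mt(N, B, base=1) / (N / B))   # O(1) base case: inflated by the N**alpha leaves
print(median_mt(N, B, base=B) / (N / B))   # O(B) base case: a bounded ratio
```

With the $O(B)$ base case the ratio provably stays bounded here: level sizes shrink by $9/10$ and every leaf has size above $B/5$, which caps the total at $110\,N/B$ under these unit constants.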

Matrix Multiplication

Standard, Naive Way

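Assume that $A$ is row-major, $B$ is column-major and $C$ is row-major (the best possible memory layout for this algorithm).

Computing each $c_{ij} = \sum_k a_{ik} b_{kj}$ takes two parallel scans, i.e. $O(N/B + 1)$ memory transfers, so the total is $O(N^2 (N/B + 1)) = O(N^3/B + N^2)$.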
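A sketch of this layout with flat Python lists (our own function; the column-major storage of $B$ is simulated by index arithmetic). Each $c_{ij}$ is the inner product of two contiguous slices, i.e. two parallel scans.

```python
def naive_matmul(A, Bt, n):
    """C = A * B for n x n matrices stored as flat lists.

    A is row-major; Bt holds B in column-major order, so column j of B is
    Bt[j*n:(j+1)*n]; the result C is row-major.
    """
    C = [0] * (n * n)
    for i in range(n):
        row = A[i * n:(i + 1) * n]          # contiguous scan of row i of A
        for j in range(n):
            col = Bt[j * n:(j + 1) * n]     # contiguous scan of column j of B
            C[i * n + j] = sum(a * b for a, b in zip(row, col))
    return C


# A = [[1, 2], [3, 4]] (row-major), B = [[5, 6], [7, 8]] (column-major: 5, 7, 6, 8)
print(naive_matmul([1, 2, 3, 4], [5, 7, 6, 8], 2))  # [19, 22, 43, 50]
```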

Block Algorithm

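Recursively divide each matrix into 4 parts, each of size $N/2 \times N/2$:

$$C_{11} = A_{11}B_{11} + A_{12}B_{21}, \quad C_{12} = A_{11}B_{12} + A_{12}B_{22}, \quad C_{21} = A_{21}B_{11} + A_{22}B_{21}, \quad C_{22} = A_{21}B_{12} + A_{22}B_{22}$$

We assume the matrices are stored in a recursive block layout (each quadrant is contiguous, recursively). So:

$$MT(N) = 8\,MT(N/2) + O(N^2/B + 1), \qquad MT(c\sqrt{M}) = O(M/B),$$

since three $c\sqrt{M} \times c\sqrt{M}$ matrices fit in cache for a small enough constant $c$.

So we stop the recursion tree at the base case, and the number of leaves is $8^{\log_2 (N / c\sqrt{M})} = O\big((N/\sqrt{M})^3\big)$.

The cost per level doubles going down ($8^d \cdot (N/2^d)^2/B = 2^d N^2/B$), so the leaves dominate and the total is $O\big((N/\sqrt{M})^3 \cdot M/B\big) = O\big(N^3/(B\sqrt{M})\big)$.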
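The recursion itself, sketched for $n \times n$ matrices with $n$ a power of two. This is our illustration: nested Python lists do not give the recursive block layout, so the sketch only shows the 8 half-size products per level. It recurses all the way down to $1 \times 1$, as a cache-oblivious algorithm must, since it cannot know where $c\sqrt{M}$ is.

```python
def add(X, Y):
    """Element-wise sum of two equal-size matrices."""
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def quadrants(X):
    """Split a 2h x 2h matrix into its four h x h quadrants."""
    h = len(X) // 2
    return ([r[:h] for r in X[:h]], [r[h:] for r in X[:h]],
            [r[:h] for r in X[h:]], [r[h:] for r in X[h:]])


def rec_matmul(A, B):
    """Multiply n x n matrices (n a power of two): 8 half-size products
    and 4 additions per level of recursion."""
    n = len(A)
    if n == 1:
        return [[A[0][0] * B[0][0]]]
    A11, A12, A21, A22 = quadrants(A)
    B11, B12, B21, B22 = quadrants(B)
    C11 = add(rec_matmul(A11, B11), rec_matmul(A12, B21))
    C12 = add(rec_matmul(A11, B12), rec_matmul(A12, B22))
    C21 = add(rec_matmul(A21, B11), rec_matmul(A22, B21))
    C22 = add(rec_matmul(A21, B12), rec_matmul(A22, B22))
    top = [r1 + r2 for r1, r2 in zip(C11, C12)]      # glue quadrant rows
    bottom = [r1 + r2 for r1, r2 in zip(C21, C22)]
    return top + bottom


print(rec_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```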

Static Search Tree (Binary Search)

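Goal: $O(\log_B N)$ memory transfers per search, without knowing $B$.

- Store $N$ elements in order in a complete binary tree on $N$ nodes

- Cut the tree at the middle level of edges so that each part has height $\frac{1}{2}\log N$. The upper part then has about $2^{\frac{1}{2}\log N} = \sqrt{N}$ nodes, and there are about $\sqrt{N}$ subtrees at the bottom, each of size about $\sqrt{N}$. So there are about $\sqrt{N} + 1$ subtrees in total.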

- Recursively lay out the subtrees and concatenate them

e.g. a tree of height 4 ($N = 15$), where each label is the node's position in the array:

- depth 0: 1

- depth 1: 2, 3

- depth 2: 4, 7, 10, 13

- depth 3: 5, 6, 8, 9, 11, 12, 14, 15
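A way to compute this layout without building it, as a sketch (`veb_pos` is our name). Nodes use BFS numbering (root 1, children of $v$ at $2v$ and $2v+1$), and for odd heights we assume the upper part gets $\lfloor h/2 \rfloor$ levels. Running it reproduces the height-4 example.

```python
def veb_pos(i, h):
    """0-based array position of the node with BFS index i in the recursive
    (van Emde Boas) layout of a complete binary tree of height h."""
    if h == 1:
        return 0
    top = h // 2                        # levels in the upper part
    bottom = h - top                    # levels in each bottom subtree
    depth = i.bit_length() - 1          # root has depth 0
    if depth < top:                     # i lies in the upper tree
        return veb_pos(i, top)
    d = depth - top                     # depth of i inside its bottom subtree
    r = i >> d                          # BFS index of that subtree's root
    k = r - (1 << top)                  # which bottom subtree, left to right
    local = (1 << d) + (i - (r << d))   # BFS index of i inside the subtree
    top_size = (1 << top) - 1
    bottom_size = (1 << bottom) - 1
    return top_size + k * bottom_size + veb_pos(local, bottom)


# Print 1-based positions level by level for the height-4 tree.
for depth in range(4):
    print([veb_pos(i, 4) + 1 for i in range(1 << depth, 1 << (depth + 1))])
# [1]
# [2, 3]
# [4, 7, 10, 13]
# [5, 6, 8, 9, 11, 12, 14, 15]
```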

Analysis: memory transfers

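- Consider the recursive level of detail at which each subtree has size at most $B$, so the height of each subtree is at least $\frac{1}{2}\log B$

- A root-to-node path visits at most $\frac{\log N}{\frac{1}{2}\log B} = 2\log_B N$ subtrees

- Each subtree is stored contiguously, so it lies in at most 2 blocks

- So the number of memory transfers is at most $4\log_B N = O(\log_B N)$

- There exists a dynamic version of this data structure, which supports `insert` and `delete` in $O\big(\log_B N + \frac{\log^2 N}{B}\big)$ amortized memory transfers, but it is super complicated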
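To see the bound numerically, the sketch below uses a `veb_pos` helper (our name) that computes a node's array position in the recursive layout from its BFS index, and counts the distinct blocks on root-to-leaf paths of a height-32 tree with $B = 256$, against a plain level-order (BFS) layout. The tree is never materialized. At this size the height-8 subtrees each occupy 255 consecutive slots (hence at most 2 blocks) and a path crosses 4 of them, so the recursive layout touches at most 8 blocks; the level-order layout touches a new block on every level from depth 8 on, at least 24 in total.

```python
def veb_pos(i, h):
    """0-based position of BFS index i (root = 1) in the recursive layout of a
    complete binary tree of height h (upper part gets h // 2 levels)."""
    if h == 1:
        return 0
    top, bottom = h // 2, h - h // 2
    depth = i.bit_length() - 1
    if depth < top:
        return veb_pos(i, top)
    d = depth - top
    r = i >> d                          # root of the bottom subtree holding i
    k = r - (1 << top)                  # index of that subtree, left to right
    local = (1 << d) + (i - (r << d))   # BFS index of i inside the subtree
    return (1 << top) - 1 + k * ((1 << bottom) - 1) + veb_pos(local, bottom)


def bfs_pos(i, h):
    """Level-order layout: BFS index i is stored at position i - 1."""
    return i - 1


def path_blocks(leaf, h, B, layout):
    """Distinct blocks touched by the root-to-leaf path ending at BFS index leaf."""
    return len({layout(leaf >> j, h) // B for j in range(h)})


h, B = 32, 256  # N = 2**32 - 1 keys
for leaf in (1 << 31, (1 << 32) - 1, (1 << 31) + 123456789):
    print(path_blocks(leaf, h, B, veb_pos), path_blocks(leaf, h, B, bfs_pos))
```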

Cache aware sorting

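- Repeated insertion into a B-tree: $MT(N) = \Theta(N \log_B N)$. Really bad! Even worse than random access, which is $O(N)$

- Binary merge sort: $MT(N) = 2\,MT(N/2) + O(N/B + 1)$ with $MT(M) = O(M/B)$, so $MT(N) = O\big(\frac{N}{B}\log_2\frac{N}{M}\big)$

- $M/B$-way merge sort

  - divide into $M/B$ subarrays

  - recursively sort each subarray

  - merge, using 1 cache block per subarray

  - $MT(N) = \frac{M}{B}\,MT\big(N/\frac{M}{B}\big) + O(N/B + 1)$ with $MT(M) = O(M/B)$, so $MT(N) = O\big(\frac{N}{B}\log_{M/B}\frac{N}{B}\big)$, which is optimal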
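A sketch of the $M/B$-way merge sort, with $M$ and $B$ passed in explicitly (that knowledge is exactly what makes it cache aware). The function name is ours, it assumes $M \ge 1$, and Python's `heapq.merge` performs the multiway merge without modeling the one-block-per-subarray buffering.

```python
import heapq


def mb_way_mergesort(a, M, B):
    """Sort directly once a subproblem fits in the cache (len(a) <= M);
    otherwise split into M // B parts (at least 2), sort each recursively,
    and merge all parts in a single multiway merge."""
    if len(a) <= M:
        return sorted(a)
    fanout = max(2, M // B)
    size = -(-len(a) // fanout)  # ceil(len(a) / fanout)
    parts = [mb_way_mergesort(a[i:i + size], M, B)
             for i in range(0, len(a), size)]
    return list(heapq.merge(*parts))


print(mb_way_mergesort([5, 3, 9, 1, 7, 2, 8], M=2, B=1))  # [1, 2, 3, 5, 7, 8, 9]
```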

Cache Oblivious Sorting

This actually needs an assumption about the cache, the tall-cache assumption: $M = \Omega(B^{1+\varepsilon})$

e.g. $M = \Omega(B^2)$

Use $N^{1/3}$-way mergesort

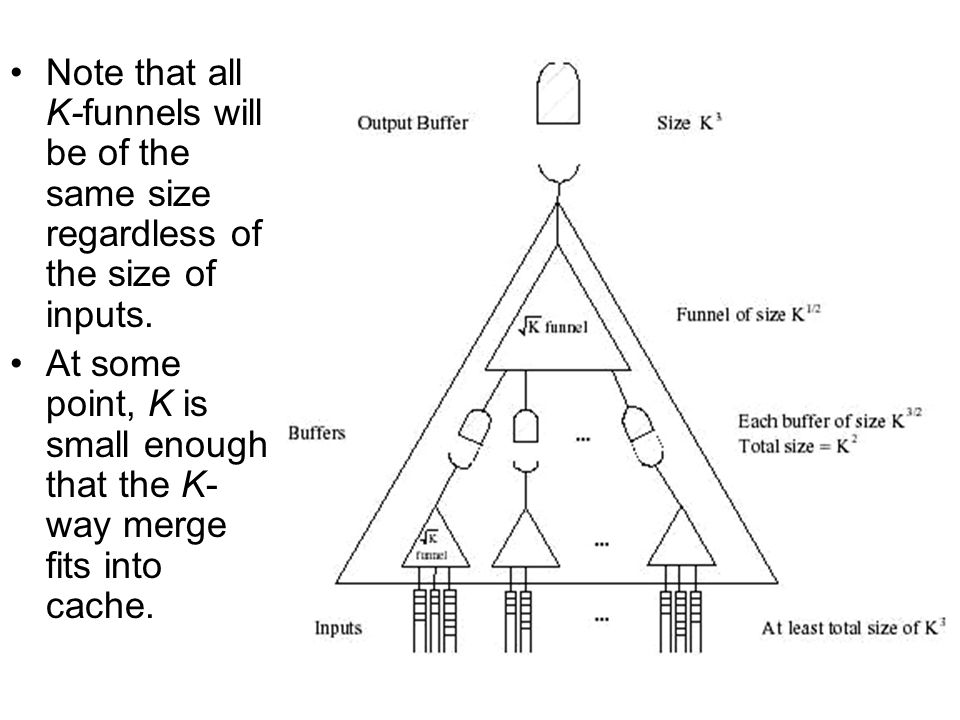

K-funnel

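It merges $K$ sorted lists of total size $K^3$ using $O\big(\frac{K^3}{B}\log_{M/B}\frac{K^3}{B} + K\big)$ memory transfers.

So now we have Funnelsort:

- divide the array into $N^{1/3}$ equal segments (each of size $N^{2/3}$)

- recursively sort each

- merge using an $N^{1/3}$-funnel

$$MT(N) = N^{1/3}\,MT(N^{2/3}) + O\Big(\frac{N}{B}\log_{M/B}\frac{N}{B} + N^{1/3}\Big)$$

So as long as we are not in the base case ($N > M \ge B^2$, hence $N^{2/3} > B$), $N/B$ dominates $N^{1/3}$, and $MT(N) = O\big(\frac{N}{B}\log_{M/B}\frac{N}{B}\big)$.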
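The recursion structure of Funnelsort as a sketch (our function). The $N^{1/3}$-funnel is replaced by Python's `heapq.merge`, which produces the same output but has none of the funnel's cache behavior; the base-case size 8 is arbitrary.

```python
import heapq


def funnelsort_skeleton(a):
    """Split into about n**(1/3) segments of size about n**(2/3), sort each
    recursively, then merge them (heapq.merge stands in for the funnel)."""
    n = len(a)
    if n <= 8:                           # small constant-size base case
        return sorted(a)
    k = max(2, round(n ** (1 / 3)))      # number of segments
    size = -(-n // k)                    # ceil(n / k)
    parts = [funnelsort_skeleton(a[i:i + size]) for i in range(0, n, size)]
    return list(heapq.merge(*parts))


print(funnelsort_skeleton(list(range(20, 0, -1))))  # the numbers 1 to 20 in order
```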

Revisit K-funnel

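First take a look at the space, excluding the input and output buffers. A $K$-funnel is a $\sqrt{K}$-funnel on top of $\sqrt{K}$ bottom $\sqrt{K}$-funnels, connected through $\sqrt{K}$ middle buffers of size $K^{3/2}$ each, so

$$S(K) = (\sqrt{K} + 1)\,S(\sqrt{K}) + O(K^2) = O(K^2)$$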
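A quick numeric check of the space recurrence, in a sketch that takes the $O(K^2)$ term as exactly $K^2$ and sets $S(K) = 1$ for $K \le 2$ (both our assumptions). It is evaluated at $K = 2^{2^i}$ so that square roots stay integral.

```python
from math import isqrt


def funnel_space(K):
    """S(K) = (sqrt(K) + 1) * S(sqrt(K)) + K**2, with S(K) = 1 for K <= 2."""
    if K <= 2:
        return 1
    r = isqrt(K)
    return (r + 1) * funnel_space(r) + K * K


for K in (4, 16, 256, 65536):
    print(K, funnel_space(K) / K**2)
```

The ratio $S(K)/K^2$ stays below 1.4 and approaches 1 as $K$ grows: the middle buffers dominate, and the recursive funnels add only $O(K^{3/2})$ on top.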

So merging means filling up the top (output) buffer:

- merge the two children's buffers as long as they are both non-empty

- whenever one empties, recursively fill it

- at the leaves, read from the input lists
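A sketch of this fill procedure (class and function names are ours). It is a plain pointer-based binary merge tree: it does not use the recursive memory layout, and every buffer gets the same fixed `capacity`, whereas a real $K$-funnel sizes its buffers by recursion level.

```python
from collections import deque


class Node:
    """A merger node with an output buffer; leaves read from an input list."""

    def __init__(self, capacity, left=None, right=None, source=None):
        self.capacity = capacity
        self.left, self.right = left, right
        self.source = source   # deque holding a sorted input list (leaves only)
        self.buf = deque()     # this node's output buffer
        self.done = False      # True once nothing more can arrive from below


def fill(node):
    """Fill node.buf up to capacity, or until all inputs below are used up."""
    if node.source is not None:              # leaf: read from the input list
        while len(node.buf) < node.capacity and node.source:
            node.buf.append(node.source.popleft())
        node.done = not node.source
        return
    while len(node.buf) < node.capacity:
        for child in (node.left, node.right):
            if not child.buf and not child.done:
                fill(child)                  # a child buffer emptied: refill it
        lb, rb = node.left.buf, node.right.buf
        if lb and rb:                        # both non-empty: move the smaller head
            node.buf.append(lb.popleft() if lb[0] <= rb[0] else rb.popleft())
        elif lb or rb:                       # the other side is exhausted for good
            node.buf.append((lb or rb).popleft())
        else:
            node.done = True
            return


def funnel_merge(lists, capacity=4):
    """Merge sorted lists through a binary tree of buffered merger nodes."""
    if not lists:
        return []
    nodes = [Node(capacity, source=deque(lst)) for lst in lists]
    while len(nodes) > 1:                    # pair nodes up, level by level
        if len(nodes) % 2:
            nodes.append(Node(capacity, source=deque()))  # empty padding leaf
        nodes = [Node(capacity, nodes[i], nodes[i + 1])
                 for i in range(0, len(nodes), 2)]
    root, out = nodes[0], []
    while True:
        fill(root)                           # fill the top buffer, then drain it
        if not root.buf:
            return out
        out.extend(root.buf)
        root.buf.clear()


print(funnel_merge([[1, 4, 7], [2, 5, 8], [3, 6, 9], [0, 10]]))
# [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
```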

Analysis:

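- Consider the first recursive level of detail, $J$, at which every $J$-funnel fits in 1/4 of the cache (i.e. $cJ^2 \le M/4$)

- We can also fit one block per input buffer of the $J$-funnel: $J$ blocks take $JB = O(\sqrt{M}\cdot\sqrt{M}) = O(M)$ space, by the tall-cache assumption

- Swapping in (reading the $J$-funnel and one block per input buffer) costs $O(J^2/B + J) = O(M/B)$

- When an input buffer empties, swap out, recursively fill it, and swap back in. Swapping back in costs $O(J^2/B + J)$

- Charge this cost to the elements that fill the buffer (amortized analysis)

- There are $J^3$ such elements, so each one is charged $O\big((J^2/B + J)/J^3\big) = O(1/B)$

- Each element passes through $O(\log K / \log J) = O(\log_M K)$ $J$-funnels, so it receives that many $O(1/B)$ charges

So the total cost is $O\big(\frac{K^3}{B}\log_M K + K\big) = O\big(\frac{K^3}{B}\log_{M/B}\frac{K^3}{B} + K\big)$, assuming the tall cache $M = \Omega(B^2)$.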
